Echo Explorer

Brief of Project Concept

What is it?

VR Echo Explorer is an immersive experience where the user relies on their voice to see and progress through the world.

Our experience helps users become familiar with their own voice: they first hear echoes of notes, and then sing the correct note back to illuminate the world around them.
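The page does not say how a sung note is judged "correct". One common approach, sketched here in Python purely as an assumption (a Unity build would do this in C#), converts the detected frequency to a MIDI note number and accepts it within a tolerance measured in cents. Pitch detection itself is out of scope: `sung_hz` is assumed to come from some detector, and the 50-cent tolerance is a hypothetical choice.

```python
import math

A4_HZ = 440.0  # assumed tuning reference: A4 = 440 Hz
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def freq_to_midi(freq_hz: float) -> float:
    """Convert a frequency in Hz to a fractional MIDI note number (A4 = 69)."""
    return 69 + 12 * math.log2(freq_hz / A4_HZ)


def note_name(midi: int) -> str:
    """Name a whole MIDI note number, e.g. 69 -> 'A4', 60 -> 'C4'."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def matches_target(sung_hz: float, target_midi: int, tolerance_cents: float = 50.0) -> bool:
    """True if the sung pitch lies within tolerance_cents of the target note.

    The 50-cent default (half a semitone) is a hypothetical threshold.
    """
    cents_off = 100 * (freq_to_midi(sung_hz) - target_midi)
    return abs(cents_off) <= tolerance_cents


# Usage: the echo plays A4 and the user sings back a slightly sharp note.
print(note_name(69), matches_target(445.0, 69))  # A4 True
print(matches_target(466.16, 69))                # A#4 is ~100 cents away: False
```

Working in cents rather than raw hertz keeps the tolerance equally forgiving for low and high voices, which suits an experience aimed at both beginners and advanced singers.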

Who is it for & Why VR?

VR immerses the user in an environment that allows them to focus on their voice and familiarize themselves with musical notes they hear and produce through singing.

- For both beginners and advanced singers
- Visual vocal feedback: visualize your singing
- Warm-ups can be repetitive
- Calm but visually stimulating environment
- Less pressure to perform

My voice:

This is my first collaborative VR project. I took on the role of Interactive VFX Designer.

Why?

Personally, I am obsessed with VFX because its colours match my character, and because VFX data can be altered and made interactive with any other data. I also think VFX animation is well suited to interactive, dynamic visual effects driven by physical body movement.

In our group project, one of the biggest reasons I wanted to be the interactive VFX designer was our topic. Our concept uses the pitch and volume of the user's voice to drive the visualization of lighting. In short: when you sing, the VFX lighting lights up your path. It is a chill-out singing experience.

I strongly advocated for visual effects as one of our visualisations.

Within the collaboration, I hoped to act as a bridge between artists and programmers. I communicated with the 3D modellers and environment designers to establish our visual tone, while also trying to control the VFX data through programming. After many attempts, the programmer and I arrived at a final approach that was simple and workable: combining the audio input with the visual effects.

I should add that my teammates cannot simply be labelled as artists or programmers. We all think both creatively and technically, but each of us has our own direction.

The first feature

I reused some VFX from my past work to test basic interactivity: the effects are switched by keyboard inputs 0 to 9 and 'A'. I also tested it in VR.

Shader Graph Resetting Script

I gave it a reset function.

As you can see, I made three different colours work together.

Test mesh-based VFX (Cube)

I tried two different ways of feeding mesh positions into the VFX, in case one of them did not work with more complicated meshes:

Point Cache

Position (Mesh)
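A point cache is, in essence, a list of points baked ahead of time on a mesh's surface. Unity's VFX Graph bakes these with its own tool, whose exact sampling method may differ; the Python sketch below is only an illustration of the general idea, using standard area-weighted surface sampling (bigger triangles receive proportionally more points). The function names are hypothetical.

```python
import math
import random

Vec = tuple[float, float, float]


def triangle_area(a: Vec, b: Vec, c: Vec) -> float:
    """Area of triangle abc: half the length of the cross product (b - a) x (c - a)."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_point_cache(vertices: list[Vec], triangles: list[tuple[int, int, int]],
                       count: int, seed: int = 0) -> list[Vec]:
    """Bake `count` points spread uniformly over the mesh surface."""
    rng = random.Random(seed)
    # Larger triangles get proportionally more points.
    areas = [triangle_area(*(vertices[i] for i in tri)) for tri in triangles]
    points: list[Vec] = []
    for tri in rng.choices(triangles, weights=areas, k=count):
        a, b, c = (vertices[i] for i in tri)
        # Uniform point inside the triangle via square-root barycentric sampling.
        s, r2 = math.sqrt(rng.random()), rng.random()
        u, v, w = 1 - s, s * (1 - r2), s * r2
        points.append(tuple(u * a[i] + v * b[i] + w * c[i] for i in range(3)))
    return points


# Usage: a unit quad made of two triangles in the z = 0 plane.
quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
cache = sample_point_cache(quad, [(0, 1, 2), (0, 2, 3)], count=1000)
print(len(cache))  # 1000
```

The trade-off against sampling the mesh live is that a baked cache is cheap at runtime but depends entirely on the mesh data being right at bake time.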

The Progress: Exploring How to Code VFX

Resetting VFX Scale to Imitate a Sound Wave

I was not sure how to control VFX values. We wanted the VFX to behave more like a sound wave.

Then I was inspired by the Shader Graph resetting script: I could also reset the scale of the VFX to make it move like a sound wave. I turned it into a dynamic loop rather than a direct reset like the one in the Shader Graph script.
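The difference between a one-off reset and a dynamic loop can be sketched as recomputing the scale every frame from a sine wave. This is a Python illustration under assumed values: the base scale, amplitude and frequency are hypothetical, and in Unity each frame's value would be written to the effect from C# (for example through `VisualEffect.SetFloat` on an exposed property).

```python
import math


def wave_scale(t: float, base: float = 1.0, amplitude: float = 0.3, freq_hz: float = 0.5) -> float:
    """Scale at time t (seconds), oscillating around `base` like a sine wave.

    All three parameters are illustrative defaults, not values from the project.
    """
    return base + amplitude * math.sin(2 * math.pi * freq_hz * t)


def run_loop(frames: int, dt: float = 1 / 60) -> list[float]:
    """Recompute the scale every frame instead of snapping back with a one-off reset."""
    return [wave_scale(frame * dt) for frame in range(frames)]


scales = run_loop(120)  # two seconds at 60 fps = one full cycle at 0.5 Hz
print(round(min(scales), 2), round(max(scales), 2))  # 0.7 1.3
```

Because the scale is a smooth function of time, swapping the fixed `amplitude` for a live loudness value is what would later let the effect respond to sound.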

The visualization looked better, but how to make it interact with sound values was still an open question.

I was just exploring pure values.

After succeeding in interacting with a pure value, I started exploring VFX properties, looking for more interactive possibilities in case we wanted more.

Add mesh now~


Test interactivity with mesh-based VFX

with a free asset:

Expose a new public float value

The interaction was dynamic.

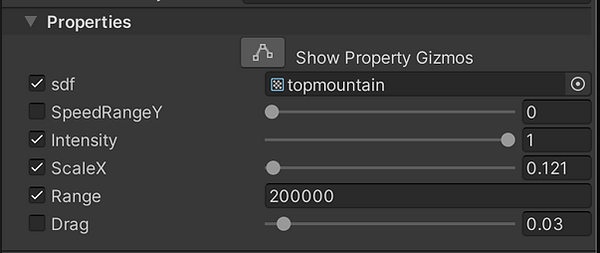

Expose the value we want to control in the VFX. Make sure whether we want to control the 'range' or the 'default'.

Choose which value to control in the properties.

With the script attached, I can say I achieved my original goal: the outputs are interactive, and VFX values can now be easily controlled by any input data.
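The idea of "any input data controls a VFX value" can be sketched as a remap from the input's range into the property's 'range', starting from its 'default'. This Python sketch is an assumption-laden illustration: `ExposedFloat` is a hypothetical stand-in for an exposed property, and "Intensity" is an invented name. In Unity the resulting number would typically be passed to the effect with `VisualEffect.SetFloat(name, value)`.

```python
from dataclasses import dataclass, field


@dataclass
class ExposedFloat:
    """Hypothetical stand-in for a float property exposed on a VFX graph."""
    name: str
    default: float
    min_value: float
    max_value: float
    value: float = field(init=False)

    def __post_init__(self) -> None:
        self.value = self.default  # start from the 'default' until input arrives

    def set_from_input(self, x: float, in_min: float, in_max: float) -> float:
        """Remap input x from [in_min, in_max] into the property's 'range', clamped."""
        t = (x - in_min) / (in_max - in_min)
        t = min(max(t, 0.0), 1.0)
        self.value = self.min_value + t * (self.max_value - self.min_value)
        return self.value


# Usage: any input (a slider, a sensor, a loudness value) drives the same property.
glow = ExposedFloat("Intensity", default=1.0, min_value=0.0, max_value=5.0)
print(glow.value)                        # 1.0
print(glow.set_from_input(50, 0, 100))   # 2.5
print(glow.set_from_input(250, 0, 100))  # 5.0 (clamped)
```

Clamping keeps an unexpectedly loud or noisy input from pushing the effect outside the range the artists designed for.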

Feedback from Mark: the slider was not necessary. He later added the maths needed to drive it from the sound input data.
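The page does not record what maths Mark added. A common way to turn sound input into a control value, sketched here in Python as an assumption, is to measure the root-mean-square (RMS) level of each block of samples, convert it to decibels, and map a chosen loudness window onto the property range. The -60 dB floor and the function names are hypothetical; in Unity the sample block would come from the microphone or an `AudioSource` in C#.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level of one block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def volume_to_property(samples: list[float], out_min: float = 0.0, out_max: float = 1.0,
                       floor_db: float = -60.0) -> float:
    """Map loudness between floor_db and 0 dBFS linearly onto [out_min, out_max]."""
    level = rms(samples)
    db = 20.0 * math.log10(level) if level > 0.0 else floor_db
    t = (db - floor_db) / -floor_db  # 0 at the floor, 1 at full scale
    t = min(max(t, 0.0), 1.0)        # clamp sounds quieter than the floor
    return out_min + t * (out_max - out_min)


print(volume_to_property([0.0] * 256))        # silence -> 0.0
print(volume_to_property([1.0, -1.0] * 128))  # full scale -> 1.0
```

Mapping in decibels rather than raw amplitude roughly follows how loudness is perceived, so quiet singing still produces a visible response.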


Starting work on 3D meshes

created by Rianna and Elin

Looking back, I can say I was completely overwhelmed at that moment. Everything had developed well until we brought the meshes and the VFX together: the point cache positions of some meshes came out dark. This meant I could not reuse my earlier progress to finish the mesh-based VFX.

I suddenly realized I had hit the biggest issue, and we did not have much time left. I could not figure out why: I found the cause in my own version, but every artist's creative process is different, and there might be no error at all in their process. After a good discussion, I made a quick decision: I learnt SDF (signed distance field) VFX to keep our process moving!
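A signed distance field stores, for any point in space, the distance to a shape's surface (negative inside, positive outside). Particles can be pulled onto the shape by stepping back along the field's gradient by that distance, which needs no per-vertex point cache. Engines such as Unity's VFX Graph do this on the GPU from a baked 3D SDF texture; the Python sketch below is only a CPU illustration with an analytic sphere standing in for a baked mesh, and all names are hypothetical.

```python
import math
from typing import Callable

Vec = tuple[float, float, float]
SDF = Callable[[Vec], float]


def sphere_sdf(radius: float) -> SDF:
    """SDF of a sphere at the origin: negative inside, zero on the surface, positive outside."""
    return lambda p: math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius


def gradient(sdf: SDF, p: Vec, eps: float = 1e-4) -> Vec:
    """Central-difference estimate of the SDF gradient (the outward surface normal)."""
    g = []
    for axis in range(3):
        step = [0.0, 0.0, 0.0]
        step[axis] = eps
        hi = (p[0] + step[0], p[1] + step[1], p[2] + step[2])
        lo = (p[0] - step[0], p[1] - step[1], p[2] - step[2])
        g.append((sdf(hi) - sdf(lo)) / (2 * eps))
    return (g[0], g[1], g[2])


def conform(p: Vec, sdf: SDF, iterations: int = 3) -> Vec:
    """Pull a particle onto the surface by stepping -distance along the normal."""
    for _ in range(iterations):
        d = sdf(p)
        g = gradient(sdf, p)
        norm = math.sqrt(sum(c * c for c in g)) or 1.0  # avoid dividing by zero
        p = (p[0] - d * g[0] / norm, p[1] - d * g[1] / norm, p[2] - d * g[2] / norm)
    return p


ball = sphere_sdf(1.0)
for start in [(3.0, 0.0, 0.0), (0.2, 0.1, 0.0)]:  # one outside, one inside
    print(round(ball(conform(start, ball)), 6))   # close to zero: both end on the surface
```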

VFX demo

Here is an additional feature:

Voice Recognition

'Stop! Time!'

I designed this with speech recognition in mind: when you say 'stop', the VFX stops! The animation is driven by a value I already know how to control. I look forward to developing it further in the future.

It can also be based on any mesh.
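Once a recognizer returns a phrase, the "stop" behaviour reduces to setting the value that drives the animation, here a play rate, to zero. The recognizer is assumed to exist elsewhere (in Unity this could be something like `KeywordRecognizer` on Windows), and in Unity the rate could be applied to the `VisualEffect` component's `playRate`. This Python sketch's class name and its 'go'/'resume' words are hypothetical; the page only describes 'stop'.

```python
import string


class VoicePlayback:
    """Hypothetical controller mapping recognized phrases to an effect's play rate."""

    STOP_WORDS = {"stop"}
    RESUME_WORDS = {"go", "resume"}  # assumption: the source only describes 'stop'

    def __init__(self) -> None:
        self.play_rate = 1.0  # 1.0 = normal speed, 0.0 = frozen in place

    def on_phrase(self, phrase: str) -> float:
        """Update and return the play rate for one recognized phrase."""
        words = {w.strip(string.punctuation).lower() for w in phrase.split()}
        if words & self.STOP_WORDS:
            self.play_rate = 0.0
        elif words & self.RESUME_WORDS:
            self.play_rate = 1.0
        return self.play_rate


vfx = VoicePlayback()
print(vfx.on_phrase("Stop! Time!"))  # 0.0
print(vfx.on_phrase("hello"))        # 0.0 (unrelated words change nothing)
print(vfx.on_phrase("Go"))           # 1.0
```

Freezing the play rate, rather than destroying the effect, keeps every particle exactly where it was, which matches the 'Stop! Time!' idea.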

Here are a couple of additional features:

I knew we had to make sure our scene did not get too chaotic.

I will use them later.

I think the bridge VFX (between the green one and the blue one) could guide users and show them where they can go. (I actually applied this straight away in my AR work.)

I think if a VFX (like the big pink one) started from a bigger mesh and stopped when it reached the target mesh, it could light up the surroundings, draw the audience's attention and finally bring it to the centre.

We quickly agreed to keep the VFX simple, because these effects were a bit too much in this case.

I am grateful for the group collaboration because it gave me time to explore the more frantic edge of VFX after we had developed the basic art and programming.

I am happy that I was able to work on several projects at the same time.